regr-tree03

statlearning

trees

tidymodels

string

mtcars

Exercise

Fit a simple predictive model based on a decision tree.

Model formula: am ~ . (dataset mtcars)

Report the model performance (ROC-AUC).

Hints:

- Tune all parameters (that the engine offers).

- Create a tuning grid with 5 values per tuning parameter.

- Perform a

- Follow the usual guidelines.

Solution

Setup

library(tidymodels)

data(mtcars)

library(tictoc)  # timing

For classification, tidymodels requires a nominal outcome variable (a factor), not a numeric one:

mtcars <-

mtcars %>%

mutate(am = factor(am))

Splitting the data
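Because `initial_split()` draws rows at random, the partition (and every metric computed from it) changes between runs unless a seed is set. A minimal sketch of a reproducible split, assuming an arbitrary seed of 42 that is not part of the original exercise:

```r
library(rsample)

# Assumed seed (42 is arbitrary, not from the original exercise)
set.seed(42)
split_a <- initial_split(mtcars)

# Resetting the same seed reproduces the same partition
set.seed(42)
split_b <- initial_split(mtcars)

identical(training(split_a), training(split_b))

# The default proportion is 3/4: 24 training and 8 test rows out of 32
nrow(training(split_a))
nrow(testing(split_a))
```

With only 8 test rows, a single misclassified car moves test accuracy by 0.125, so results on this dataset are coarse.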

d_split <- initial_split(mtcars)

d_train <- training(d_split)

d_test <- testing(d_split)

Model(s)

mod_tree <-

decision_tree(mode = "classification",

cost_complexity = tune(),

tree_depth = tune(),

min_n = tune())

Recipe(s)

rec1 <-

recipe(am ~ ., data = d_train)

Resampling

rsmpl <- vfold_cv(d_train, v = 2)

Workflow

wf1 <-

workflow() %>%

add_recipe(rec1) %>%

add_model(mod_tree)

Tuning/Fitting
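With three tuning parameters and five levels each, a regular grid crosses all levels and yields 5^3 = 125 candidates. The sketch below builds such a grid directly from the dials parameter objects; it assumes that their default ranges are the ones picked up from the model specification, and the name `grid_sketch` is hypothetical.

```r
library(dials)

# Regular grid over the three decision-tree parameters, 5 levels each
grid_sketch <- grid_regular(
  cost_complexity(),
  tree_depth(),
  min_n(),
  levels = 5
)

# Number of candidate combinations: 5 * 5 * 5
nrow(grid_sketch)

# Distinct values tried per parameter
sapply(grid_sketch, function(col) length(unique(col)))
```

Each candidate is fitted once per resample, so the cost of tuning grows with the product of grid size and number of folds.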

Tuning grid:

tune_grid <- grid_regular(extract_parameter_set_dials(mod_tree), levels = 5)

tune_grid

# A tibble: 125 × 3
   cost_complexity tree_depth min_n
             <dbl>      <int> <int>
 1    0.0000000001          1     2
 2    0.0000000178          1     2
 3    0.00000316            1     2
 4    0.000562              1     2
 5    0.1                   1     2
 6    0.0000000001          4     2
 7    0.0000000178          4     2
 8    0.00000316            4     2
 9    0.000562              4     2
10    0.1                   4     2
# ℹ 115 more rows

tic()

fit1 <-

tune_grid(object = wf1,

grid = tune_grid,

metrics = metric_set(roc_auc),

resamples = rsmpl)

toc()

17.644 sec elapsed

Best candidate

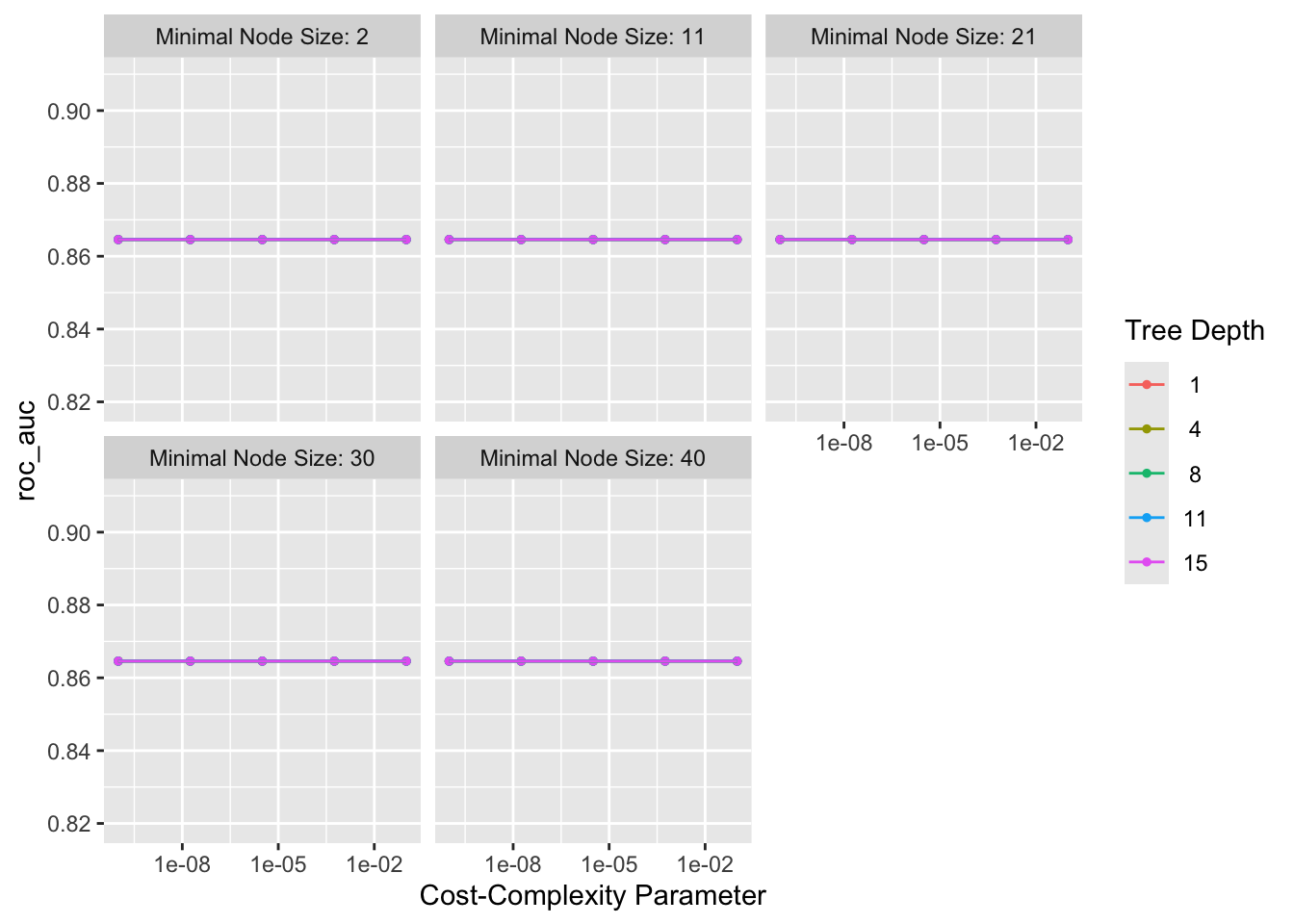

autoplot(fit1)

show_best(fit1)

# A tibble: 5 × 9
  cost_complexity tree_depth min_n .metric .estimator  mean     n std_err
            <dbl>      <int> <int> <chr>   <chr>      <dbl> <int>   <dbl>
1    0.0000000001          1     2 roc_auc binary     0.865     2  0.0521
2    0.0000000178          1     2 roc_auc binary     0.865     2  0.0521
3    0.00000316            1     2 roc_auc binary     0.865     2  0.0521
4    0.000562              1     2 roc_auc binary     0.865     2  0.0521
5    0.1                   1     2 roc_auc binary     0.865     2  0.0521
# ℹ 1 more variable: .config <chr>

Finalizing

wf1_finalized <-

wf1 %>%

finalize_workflow(select_best(fit1))

Last fit
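`last_fit()` refits a workflow on the training portion of a split and evaluates it once on the test portion. Since the task asks for ROC-AUC only, a metric set can be passed to restrict the output. The following is a self-contained sketch, not the solution's own code: the seed, the object names ending in `_sketch`, and the fixed hyperparameters (standing in for tuned values) are assumptions for illustration.

```r
library(tidymodels)

set.seed(42)  # assumed seed, only to make this sketch reproducible

# Outcome must be a factor for classification
cars <- mtcars %>% mutate(am = factor(am))
split_sketch <- initial_split(cars)

# Fixed hyperparameters stand in for the tuned values (hypothetical choice)
wf_sketch <-
  workflow() %>%
  add_formula(am ~ .) %>%
  add_model(decision_tree(mode = "classification",
                          tree_depth = 1,
                          min_n = 2))

# Fit on the training data, evaluate on the test data, report ROC-AUC only
final_sketch <- last_fit(wf_sketch, split_sketch,
                         metrics = metric_set(roc_auc))

metrics_sketch <- collect_metrics(final_sketch)
metrics_sketch
```

Without the `metrics` argument, `last_fit()` falls back to a default set of classification metrics, which is why several rows can appear in the collected results.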

final_fit <-

last_fit(object = wf1_finalized, d_split)

collect_metrics(final_fit)

# A tibble: 3 × 4
  .metric     .estimator .estimate .config
  <chr>       <chr>          <dbl> <chr>
1 accuracy    binary         0.875 Preprocessor1_Model1
2 roc_auc     binary         0.833 Preprocessor1_Model1
3 brier_class binary         0.132 Preprocessor1_Model1

Categories:

- statlearning

- trees

- tidymodels

- string